Leveraging Vision Language Models for Custom Data Annotation: A Case Study in PPE Analysis

Introduction

In the rapidly evolving landscape of AI solutions for process industries, one of the most significant challenges faced by service providers is the need to develop custom models for highly specialized client problems. These problems often require extensive datasets with detailed annotations, yet manual annotation is time-consuming and resource-intensive. Additionally, due to data confidentiality concerns, using public annotation tools or services is frequently not an option.

Enter Vision Language Models (VLMs) – a promising solution that combines computer vision and natural language processing capabilities. While VLMs have gained attention for their performance in general image recognition and visual question answering, their potential as annotation assistants for specialized industrial applications remains largely unexplored. In this blog post, we’ll examine how VLMs can revolutionize the custom data annotation process through a practical case study in Personal Protective Equipment (PPE) analysis.

Our exploration began with a simple question: Could VLMs effectively analyze and annotate specialized industrial imagery using natural language prompts? To test this, we used a straightforward prompt: “Please describe in particular, the protective equipment being worn, if present.” Our goal was to evaluate whether VLMs could not only detect PPE but also distinguish between subtle variations – different types of vests, masks, and safety glasses – across construction, medical, and mining domains.

Let’s explore two distinct approaches for leveraging VLMs in this context, each offering unique insights into their potential as annotation tools for specialized industrial applications.

More details about the experiment and code can be found in our GitHub repo.

Phase 1: PPE Analysis using OllaMa and LLaVa-13B/LLaMa3.2-Vision

In the first phase of our exploration, we focus on using OllaMa to run two VLMs: LLaVa-13B and LLaMa3.2-Vision.

OllaMa is an open-source tool for running large language models, including VLMs, on local hardware. It exposes a command-line interface and a local REST API (with official client libraries), so we can download and query these models without building our own inference stack. Because the models run locally, the images also stay on our own machines.

Our Jupyter notebook walks through the step-by-step process of using the LLaVa-13B and LLaMa3.2-Vision models for PPE analysis. Setup and usage instructions are in the GitHub repo.
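As a minimal sketch of the single-image step, assuming a local OllaMa server on its default port (11434) and a `llava:13b` model tag pulled beforehand, the snippet below sends the prompt from our experiment plus one base64-encoded image to OllaMa's `/api/generate` REST endpoint using only the Python standard library. The helper names `build_payload` and `describe_image` are hypothetical and may not match how the notebook is organized.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Prompt used in our experiment; the model tag and URL below are assumptions.
PPE_PROMPT = ("Please describe in particular, the protective equipment "
              "being worn, if present.")
OLLAMA_URL = "http://localhost:11434/api/generate"  # OllaMa's default local endpoint


def build_payload(image_bytes: bytes, model: str = "llava:13b",
                  prompt: str = PPE_PROMPT) -> dict:
    """Build a non-streaming /api/generate request carrying one base64 image."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # ask for a single JSON object instead of a token stream
    }


def describe_image(path: str, model: str = "llava:13b",
                   url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send one image to a running OllaMa server and return the model's text."""
    payload = build_payload(Path(path).read_bytes(), model=model)
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Non-streaming responses put the generated text under "response".
        return json.loads(response.read())["response"]
```

For example, `describe_image("site_001.jpg", model="llama3.2-vision")` would query LLaMa3.2-Vision instead (the file name is illustrative). Only the model tag changes between models, which is part of what makes quick side-by-side comparisons cheap.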

Example Output from LLaVa-13B

Below is an example output from LLaVa-13B.

Figure 1: Example output from LLaVa-13B for one image

For comparison, here is the output from LLaVa-7B for the same image.

Figure 2: Example output from LLaVa-7B for the same image

Key Takeaways from the OllaMa-based Phase

Beyond the specific PPE detection capabilities, this phase revealed important insights about using VLMs for custom annotation tasks:

- Rapid Prototyping: The OllaMa library enables fast experimentation with VLMs across annotation scenarios, letting teams quickly assess whether VLMs suit their specific use case.

- Natural Language Interface: The ability to use simple prompts makes VLMs accessible to domain experts who may not have extensive computer vision expertise.

- Annotation Consistency: While not perfect, VLMs showed promising consistency in identifying subtle variations in PPE types, suggesting potential for reducing annotation variability.

- Limitations in Specialized Contexts: The models served through OllaMa are quantized, which can cost accuracy on fine-grained details. This highlighted the importance of carefully evaluating VLM performance on domain-specific elements.
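VLM outputs are free text, so checking consistency across models or runs, or turning descriptions into annotations, needs a mapping onto a fixed label set. The sketch below shows one simple way to do this with keyword patterns. The label vocabulary and patterns are hypothetical and not part of our pipeline, and the matching is deliberately naive: it does not understand negations such as "no gloves".

```python
import re

# Hypothetical PPE vocabulary: label -> regex patterns that indicate it in free text.
PPE_PATTERNS = {
    "hard_hat": [r"hard ?hat", r"safety helmet", r"\bhelmet\b"],
    "hi_vis_vest": [r"hi(gh)?[- ]?vis(ibility)?", r"safety vest", r"reflective vest"],
    "face_mask": [r"\bn95\b", r"surgical mask", r"respirator", r"face ?mask"],
    "safety_glasses": [r"safety glasses", r"goggles", r"protective eyewear"],
    "gloves": [r"\bgloves?\b"],
}


def extract_labels(description: str) -> set[str]:
    """Return the PPE labels whose patterns occur in a VLM description.

    Matching is case-insensitive keyword search; negated mentions
    (e.g. "not wearing gloves") are still counted as present.
    """
    text = description.lower()
    return {
        label
        for label, patterns in PPE_PATTERNS.items()
        if any(re.search(pattern, text) for pattern in patterns)
    }


def label_agreement(a: set[str], b: set[str]) -> float:
    """Jaccard agreement between two label sets; 1.0 when both are empty."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)
```

Comparing `label_agreement(extract_labels(out_13b), extract_labels(out_7b))` over a batch would give a rough, label-level view of how consistently two models describe the same images.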

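Phase 2: PPE Analysis using Transformers and Ovis1.6-Gemma2-9B

In the second phase, we ran VLMs such as Ovis1.6-Gemma2-9B through the Hugging Face Transformers library. Below is an example output from this model.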

Figure 3: Example output from the Ovis1.6-Gemma2-9B model

Key Takeaways from the Transformers-based Phase

The Transformers-based approach revealed additional insights about VLMs as annotation tools:

- Annotation Flexibility: Access to a wide range of VLMs lets teams find the best fit for their specific annotation requirements and domain constraints.

- Integration Potential: The robust Transformers ecosystem makes it easier to integrate VLM-based annotation into existing data processing pipelines.

- Performance Trade-offs: Although much slower in our runs, these models demonstrated higher accuracy in detecting subtle variations, which is crucial for specialized annotation tasks.

- Customization Options: The framework’s flexibility enables teams to fine-tune the annotation process for their specific industrial context.
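The integration point is easiest to see when the model call sits behind a narrow interface. In the sketch below, which reflects our own assumptions rather than the repo's code, any function from an image path to a text description acts as a backend, so an OllaMa request and a wrapper around a Transformers model's `generate` call become interchangeable. The names `annotate`, `AnnotationRecord`, and the JSON Lines output format are hypothetical. We leave the Transformers wrapper itself out, since model loading differs between checkpoints such as Ovis1.6-Gemma2-9B.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Iterable

# Any function mapping an image path to a text description can serve as a
# backend, e.g. a wrapper around an OllaMa request or a Transformers model.
DescribeFn = Callable[[str], str]


@dataclass
class AnnotationRecord:
    image: str        # path or ID of the annotated image
    model: str        # which VLM produced the draft
    description: str  # raw free-text output, kept for later review


def annotate(images: Iterable[str], model_name: str,
             describe: DescribeFn) -> list[AnnotationRecord]:
    """Run one backend over a set of images and collect draft annotations."""
    return [AnnotationRecord(img, model_name, describe(img)) for img in images]


def to_jsonl(records: Iterable[AnnotationRecord]) -> str:
    """Serialize records as JSON Lines (one object per image) for downstream tools."""
    return "".join(json.dumps(asdict(rec)) + "\n" for rec in records)
```

Because the backend is just a callable, a stub such as `lambda path: "test"` is enough to exercise the pipeline in unit tests before any model is loaded.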

Experimental Evaluation and Results Analysis

To rigorously assess the VLMs’ capabilities for industrial annotation tasks, we conducted a comprehensive manual evaluation of their performance on our custom PPE dataset. This evaluation was particularly important given the lack of traditional ground truth annotations, reflecting real-world scenarios where existing annotations may not be available.

Dataset Categorization and Evaluation Metrics

We grouped the test images into three complexity levels, “Simple”, “Moderate”, and “Complex”, to understand how the models perform across different scenarios.

Because VLM outputs are qualitative and come without confidence scores, we graded each output on a three-tier scale: “High Accuracy”, “Moderate Accuracy”, and “Low Accuracy”.
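The two three-level scales combine into a small grid per model. As a sketch, assuming each manual grade is stored as a (model, image complexity, accuracy tier) triple, tallying the grades per model yields the counts that a stacked bar chart like the one below is built from. The enum and function names are our own and hypothetical.

```python
from collections import Counter
from enum import Enum


class Complexity(Enum):
    SIMPLE = "Simple"
    MODERATE = "Moderate"
    COMPLEX = "Complex"


class Accuracy(Enum):
    HIGH = "High Accuracy"
    MODERATE = "Moderate Accuracy"
    LOW = "Low Accuracy"


def tally(grades: list[tuple[str, Complexity, Accuracy]]) -> dict[str, Counter]:
    """Count (complexity, accuracy) pairs per model from manual review grades.

    Each grade is a (model_name, image_complexity, reviewer_accuracy_tier) triple.
    Missing combinations read as 0 because Counter defaults to zero.
    """
    per_model: dict[str, Counter] = {}
    for model, complexity, accuracy in grades:
        per_model.setdefault(model, Counter())[(complexity, accuracy)] += 1
    return per_model
```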

Further details on the dataset and evaluation criteria can be found in the Evaluation section of our GitHub repo.

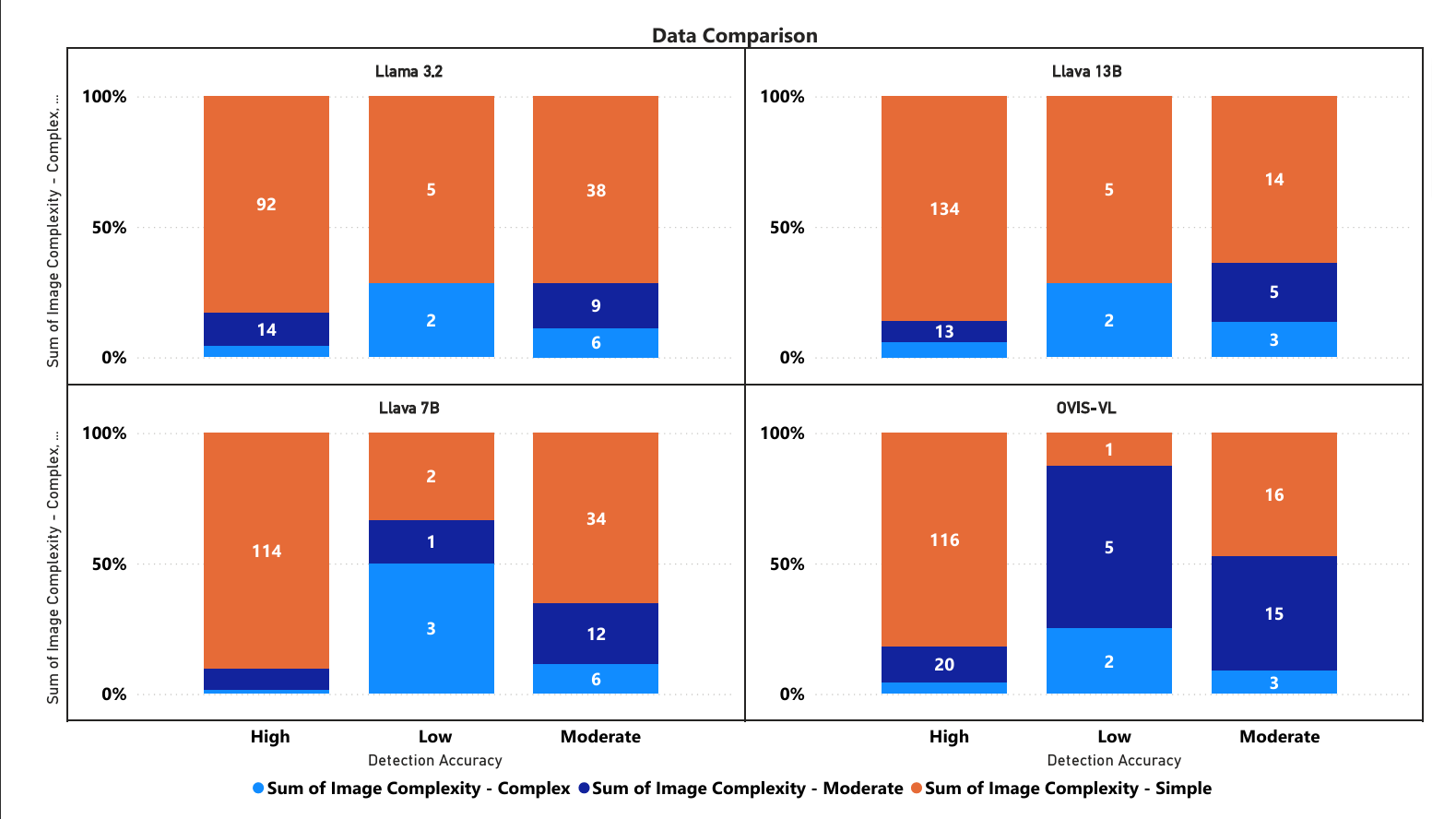

Performance Visualization

We consolidated the results from all four models into a single stacked bar chart for side-by-side comparison.

Figure 4: Consolidated results for LLaMa3.2-Vision, LLaVa-13B, LLaVa-7B, and Ovis1.6-Gemma2-9B

Observations:

- All models handle simple images (orange) well at high detection accuracy

- LLaVa-13B shows the strongest performance, with 134 simple images detected at high accuracy

- Complex images (blue) generally have lower detection counts across all models

- Low detection accuracy category shows smallest total numbers across models

- Ovis1.6-Gemma2-9B appears to handle moderate-complexity images (dark blue) better than the other models

The y-axis shows percentages stacked to 100%, while numbers inside bars represent actual counts. Each model’s performance is evaluated based on how well it handles different image complexities at varying detection accuracy levels.
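Each bar therefore carries two numbers per segment: the raw count and its share of the bar. A minimal sketch of that normalization follows, assuming a bar is represented as a mapping from segment label (for example, a complexity level) to count. Which grouping forms a bar in the chart is our assumption, and the helper names are hypothetical.

```python
def to_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Convert the raw segment counts of one stacked bar into shares summing to 100."""
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in counts}
    return {label: 100.0 * n / total for label, n in counts.items()}


def bar_labels(counts: dict[str, int]) -> list[str]:
    """Label each segment with its raw count and its percentage share."""
    shares = to_percentages(counts)
    return [f"{label}: {n} ({shares[label]:.1f}%)" for label, n in counts.items()]
```

With toy counts, `bar_labels({"Simple": 3, "Moderate": 1})` returns `["Simple: 3 (75.0%)", "Moderate: 1 (25.0%)"]`. Normalizing to 100% makes models comparable even if they were graded on different numbers of images, while the inline counts keep the absolute sizes visible.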

Key Insights from Evaluation

- Complexity Impact
  - All models perform best with simple images at high detection accuracy
  - Performance decreases notably with complex images
  - Simple images dominate the high accuracy category across all models

- Model-Specific Strengths
  - LLaVa-13B shows superior performance, with 134 simple images detected at high accuracy
  - Ovis1.6-Gemma2-9B handles moderate complexity better than the other models
  - LLaVa-7B shows balanced performance across complexity levels

- Detection Accuracy Patterns
  - The high accuracy category shows the largest detection counts
  - The low accuracy category consistently shows the lowest numbers
  - Moderate accuracy varies across models

- Annotation Quality
  - High accuracy results showed potential for VLMs in automated annotation
  - Moderate accuracy cases often required only minimal human correction
  - Low accuracy instances highlighted areas needing human oversight

- Practical Implications
  - Results suggest VLMs could significantly reduce manual annotation effort
  - Model selection should consider specific use case requirements
  - A hybrid approach combining VLM capabilities with human verification may be optimal

These evaluation results demonstrate both the potential and limitations of using VLMs for industrial annotation tasks. While no single model achieved perfect performance across all scenarios, the results suggest that VLMs can serve as valuable tools in the annotation pipeline, particularly for initial annotation passes that can be refined by human experts.
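One way to make that hybrid workflow measurable is to log each reviewer's final annotation against the VLM draft and bucket the outcome. The sketch below is an assumption, not our evaluation protocol: the three `Verdict` buckets loosely mirror the accuracy tiers, and the 0.5 similarity cut-off (computed with Python's `difflib.SequenceMatcher`) between a light correction and a rewrite is a hypothetical threshold that would need tuning.

```python
import difflib
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ACCEPTED = "accepted"    # reviewer kept the VLM draft unchanged
    CORRECTED = "corrected"  # reviewer lightly edited the draft
    REWRITTEN = "rewritten"  # reviewer largely replaced the draft


@dataclass
class Review:
    image: str
    draft: str   # VLM output shown to the reviewer
    final: str   # annotation the reviewer approved
    verdict: Verdict


def record_review(image: str, draft: str, final: str,
                  rewrite_threshold: float = 0.5) -> Review:
    """Bucket a review by how much of the VLM draft survived (hypothetical threshold)."""
    if final == draft:
        verdict = Verdict.ACCEPTED
    elif difflib.SequenceMatcher(None, draft, final).ratio() >= rewrite_threshold:
        verdict = Verdict.CORRECTED
    else:
        verdict = Verdict.REWRITTEN
    return Review(image, draft, final, verdict)


def summarize(reviews: list[Review]) -> dict[str, float]:
    """Fraction of reviews in each bucket, a rough proxy for manual effort saved."""
    if not reviews:
        return {v.value: 0.0 for v in Verdict}
    return {v.value: sum(r.verdict is v for r in reviews) / len(reviews)
            for v in Verdict}
```

A high "accepted" plus "corrected" share would support using VLMs for the initial pass, while a growing "rewritten" share on, say, complex images would signal where human annotation is still needed.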

Comparing the Transformers-based Approach with the OllaMa-based Approach

The table below summarizes the performance, strengths, and trade-offs of the Transformers-based approach relative to the OllaMa-based phase:

| Aspect | Transformers-based Approach | OllaMa-based Approach |
| --- | --- | --- |
| Model Diversity | Wide range of models available (e.g., Ovis1.6-Gemma2-9B, Qwen2-VL-9B), each with unique characteristics | Limited to specific models optimized for OllaMa (LLaVa-13B, LLaMa3.2-Vision) |
| Performance | Higher accuracy; better model robustness | Faster detection capabilities; lower accuracy; quicker processing time |
| Flexibility | More extensible framework; easier integration with larger systems; greater customization options | Simpler implementation; faster deployment; limited customization |
| Ease of Use | Established ecosystem; comprehensive documentation; more complex setup | User-friendly interface; simpler setup process; less technical overhead |
| Processing Time (185 images) | 900-1200 minutes | 15-20 minutes |
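To put the processing-time row in per-image terms, the short calculation below divides the reported ranges by the 185 evaluation images. The helpers `seconds_per_image` and `projected_hours` are illustrative, and projecting to a larger dataset assumes throughput stays constant, which may not hold across different hardware or image sizes.

```python
def seconds_per_image(total_minutes: float, n_images: int) -> float:
    """Average wall-clock seconds per image for a batch run."""
    return total_minutes * 60.0 / n_images


def projected_hours(sec_per_image: float, n_images: int) -> float:
    """Projected wall-clock hours to annotate n_images at a measured rate."""
    return sec_per_image * n_images / 3600.0


# Ranges reported in the comparison table for the 185-image evaluation set.
runs = {"Transformers-based": (900, 1200), "OllaMa-based": (15, 20)}
for name, (low, high) in runs.items():
    print(f"{name}: {seconds_per_image(low, 185):.1f}-"
          f"{seconds_per_image(high, 185):.1f} s/image")
# Transformers-based: 291.9-389.2 s/image
# OllaMa-based: 4.9-6.5 s/image
```

That is roughly 5 to 6.5 minutes versus 5 to 6.5 seconds per image, a gap of about 60 times in our setup. A call such as `projected_hours(seconds_per_image(20, 185), 10_000)` gives a quick upper-end estimate for a larger, hypothetical dataset.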

Conclusion

Our exploration of VLMs through the lens of PPE analysis demonstrates their broader potential as tools for custom data annotation in industrial applications. While traditional annotation methods often require substantial manual effort and raise confidentiality concerns, VLMs offer a promising alternative that combines the flexibility of natural language interpretation with the consistency of automated systems.

The approaches detailed in this blog post – using both OllaMa and Transformers-based implementations – showcase how VLMs can be leveraged to address the challenging task of annotating specialized industrial data. While our case study focused on PPE detection, the insights gained are applicable to a wide range of industrial annotation challenges, from quality control to process monitoring.

As we continue to push the boundaries of what’s possible with VLMs, we envision them becoming an integral part of the industrial AI solution development process, particularly in scenarios where traditional annotation methods are impractical or impossible. The techniques and insights shared here provide a foundation for teams looking to explore VLM-based annotation in their own specialized domains, potentially transforming how we approach custom model development for industrial applications.